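Every day we recommend one quality open-source project on GitHub and one selected English-language article on technology or programming. Follow Open Source Daily (开源日报). QQ group: 202790710; Telegram group: https://t.me/OpeningSourceOrg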

Today's recommended open-source project: "Simplicity Is Justice: Android-KTX"

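Why we recommend it: Android-KTX is a set of Kotlin extensions for Android app development. Its goal is to make Android development with Kotlin more concise, pleasant, and idiomatic, without adding new features.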

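How it works

Understanding what happens under the hood keeps the library from feeling like magic and makes problems easier to track down.

Android-KTX relies mainly on a few Kotlin language features. Once you understand them, you can easily write similar wrappers yourself and build up a library of your own, which is a good way to consolidate what you have learned.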

Extensions

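The first example in the official comparison further below, turning a String into a Uri, relies on this feature. The official Kotlin documentation also describes it.

The source says it all: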

```kotlin
import android.net.Uri

inline fun String.toUri(): Uri = Uri.parse(this)
```

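It simply adds an extension function to String. For Java developers it is easy to understand: it plays the same role as the familiar static utility method below, a pattern seen all the time in everyday code. In fact, Kotlin compiles an extension function to a static method that takes the receiver as its first parameter.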

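```java
import android.net.Uri;

// A typical Java utility class: the "receiver" is just an ordinary parameter.
public class StringUtil {

    public static Uri parse(String uriString) {
        return Uri.parse(uriString);
    }
}
```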

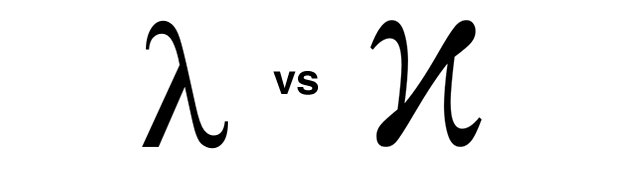
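Here is a minimal sketch that runs the same technique on a plain JVM without the Android SDK. `toJavaUri` and `toJavaUriOrNull` are hypothetical names and are not part of Android-KTX. `java.net.URI` stands in for `android.net.Uri`.

```kotlin
import java.net.URI

// Hypothetical extension with the same shape as KTX's String.toUri(),
// using java.net.URI so it runs without the Android SDK.
// Inside the body, `this` is the String the function is called on.
fun String.toJavaUri(): URI = URI(this)

// Extensions may also be declared on a nullable receiver. This hypothetical
// variant returns null for null or blank input instead of throwing.
fun String?.toJavaUriOrNull(): URI? =
    if (this == null || this.isBlank()) null else URI(this)
```

Calling `"https://example.com/path?q=1".toJavaUri().host` yields `"example.com"`. At the call site it reads like a member of String, but no String class was modified.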

Lambdas

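The second example in the comparison, editing SharedPreferences, mainly relies on lambdas. The Kotlin documentation covers these too.

Again, here is the code. It adds an extension function to SharedPreferences whose parameter is a lambda. When a function's last parameter is a lambda, you can write the lambda after the closing parenthesis. If it is the only argument, you can drop the parentheses altogether. The parameter type `SharedPreferences.Editor.() -> Unit` is a function type with receiver, so inside the block you can call Editor methods such as `putBoolean` directly.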

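```kotlin
import android.content.SharedPreferences

inline fun SharedPreferences.edit(action: SharedPreferences.Editor.() -> Unit) {
    val editor = edit()   // the framework's own SharedPreferences.edit()
    action(editor)        // run the caller's block with the Editor as `this`
    editor.apply()        // apply the changes; the disk write happens asynchronously
}
```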
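The extension above needs the Android SDK. The sketch below reproduces the same pattern on a plain JVM. `FakePrefs` and its nested `Editor` are hypothetical in-memory stand-ins for SharedPreferences, not Android APIs.

```kotlin
// Hypothetical in-memory stand-in for SharedPreferences.
class FakePrefs {
    private val data = mutableMapOf<String, Any>()

    fun getBoolean(key: String, default: Boolean): Boolean =
        data[key] as? Boolean ?: default

    fun edit(): Editor = Editor(this)

    // Hypothetical stand-in for SharedPreferences.Editor: buffers changes.
    class Editor(private val prefs: FakePrefs) {
        private val pending = mutableMapOf<String, Any>()

        fun putBoolean(key: String, value: Boolean): Editor {
            pending[key] = value
            return this
        }

        // Copies buffered changes into the owning FakePrefs.
        fun apply() {
            prefs.data.putAll(pending)
            pending.clear()
        }
    }
}

// Same shape as the extension above: `action` is a function type with
// receiver, so inside the block `this` is the Editor.
inline fun FakePrefs.edit(action: FakePrefs.Editor.() -> Unit) {
    val editor = edit()
    action(editor)
    editor.apply()
}
```

With this in place, `prefs.edit { putBoolean("key", true) }` needs neither the explicit `edit()` call nor the trailing `apply()`.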

Default Arguments

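None of the comparison examples uses this feature, so here is a separate example. The official Kotlin documentation describes it as well.

In other words, when a function has several parameters, you do not have to pass every one of them in order as in Java. You can pass only the ones you need, by name. The usual Java workaround is method overloading, with each overload filling in default values one by one.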

```kotlin
class ViewTest {
    private val context = InstrumentationRegistry.getContext()
    private val view = View(context)

    @Test
    fun updatePadding() {
        view.updatePadding(top = 10, right = 20)
        assertEquals(0, view.paddingLeft)
        assertEquals(10, view.paddingTop)
        assertEquals(20, view.paddingRight)
        assertEquals(0, view.paddingBottom)
    }
}
```

Here is how `updatePadding` is defined:

```kotlin
fun View.updatePadding(
    @Px left: Int = paddingLeft,
    @Px top: Int = paddingTop,
    @Px right: Int = paddingRight,
    @Px bottom: Int = paddingBottom
) {
    setPadding(left, top, right, bottom)
}
```
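A default value is evaluated at call time, so it can read the receiver's current state. That is what lets omitted sides keep their padding. Here is a minimal JVM sketch of the same technique, where `PaddedBox` is a hypothetical stand-in for `View`.

```kotlin
// Hypothetical stand-in for android.view.View that only tracks padding.
class PaddedBox(
    var paddingLeft: Int = 0,
    var paddingTop: Int = 0,
    var paddingRight: Int = 0,
    var paddingBottom: Int = 0
) {
    fun setPadding(left: Int, top: Int, right: Int, bottom: Int) {
        paddingLeft = left
        paddingTop = top
        paddingRight = right
        paddingBottom = bottom
    }
}

// Same technique as updatePadding: each default is the receiver's current
// value, so any side the caller omits is passed through unchanged.
fun PaddedBox.updatePadding(
    left: Int = paddingLeft,
    top: Int = paddingTop,
    right: Int = paddingRight,
    bottom: Int = paddingBottom
) {
    setPadding(left, top, right, bottom)
}
```

Calling `box.updatePadding(top = 10, right = 20)` changes only the top and right padding.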

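Default arguments can also replace a familiar Java pattern. In Java, the builder pattern is commonly used to set each parameter one at a time and then create the object. With default arguments, any value that does not need to change simply keeps its default, which keeps the calling code short.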
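Here is a minimal sketch of that idea using a hypothetical `HttpRequestConfig` type. Named and default arguments take the place of a builder, and the data class's generated `copy` handles the "change one field" case. `@JvmOverloads` asks the compiler to generate the overloads that Java callers would otherwise have to write by hand.

```kotlin
// Hypothetical configuration type; in Java this would typically get a Builder.
data class HttpRequestConfig @JvmOverloads constructor(
    val url: String,
    val method: String = "GET",
    val timeoutMillis: Int = 10_000,
    val followRedirects: Boolean = true
)
```

For example, `HttpRequestConfig("https://example.com", timeoutMillis = 3_000)` sets only what differs from the defaults, and `config.copy(method = "POST")` derives a variant without a builder.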

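Advantages:

As a language for Android development, Kotlin is already very concise, and Android-KTX makes Kotlin code more concise still.

Here is a set of comparisons from the official documentation: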

Kotlin:

```kotlin
val uri = Uri.parse(myUriString)
```

Kotlin with Android KTX:

```kotlin
val uri = myUriString.toUri()
```

Kotlin:

```kotlin
sharedPreferences.edit()
    .putBoolean("key", value)
    .apply()
```

Kotlin with Android KTX:

```kotlin
sharedPreferences.edit {
    putBoolean("key", value)
}
```

Kotlin:

```kotlin
val pathDifference = Path(myPath1).apply {
    op(myPath2, Path.Op.DIFFERENCE)
}

canvas.apply {
    val checkpoint = save()
    translate(0F, 100F)
    drawPath(pathDifference, myPaint)
    restoreToCount(checkpoint)
}
```

Kotlin with Android KTX:

```kotlin
val pathDifference = myPath1 - myPath2

canvas.withTranslation(y = 100F) {
    drawPath(pathDifference, myPaint)
}
```
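The KTX version also relies on operator overloading, where `-` maps to a function named `minus` marked `operator`. It also uses an inline function that combines default arguments with a lambda with receiver. The JVM-only sketch below shows both. `Shape`, `FakeCanvas`, and this `withTranslation` are hypothetical stand-ins. The try/finally is a design choice that restores the canvas even if the block throws.

```kotlin
// Hypothetical shape modeled as a set of grid cells, standing in for Path.
data class Shape(val cells: Set<Pair<Int, Int>>)

// `a - b` compiles to `a.minus(b)`; here it is a set difference, analogous
// to Path.op(other, Path.Op.DIFFERENCE).
operator fun Shape.minus(other: Shape): Shape = Shape(cells - other.cells)

// Hypothetical stand-in for Canvas that only tracks a translation stack.
class FakeCanvas {
    var dx = 0f
        private set
    var dy = 0f
        private set
    private val saved = mutableListOf<Pair<Float, Float>>()

    // Returns the stack depth before saving, to be passed to restoreToCount.
    fun save(): Int {
        val count = saved.size
        saved.add(dx to dy)
        return count
    }

    fun translate(x: Float, y: Float) {
        dx += x
        dy += y
    }

    // Pops saved states until only `count` entries remain.
    fun restoreToCount(count: Int) {
        while (saved.size > count) {
            val (x, y) = saved.removeAt(saved.lastIndex)
            dx = x
            dy = y
        }
    }
}

// Default arguments for x/y plus a lambda with receiver for the body.
inline fun FakeCanvas.withTranslation(
    x: Float = 0f,
    y: Float = 0f,
    block: FakeCanvas.() -> Unit
) {
    val checkpoint = save()
    translate(x, y)
    try {
        block()
    } finally {
        restoreToCount(checkpoint)
    }
}
```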

Kotlin:

```kotlin
view.viewTreeObserver.addOnPreDrawListener(
    object : ViewTreeObserver.OnPreDrawListener {
        override fun onPreDraw(): Boolean {
            view.viewTreeObserver.removeOnPreDrawListener(this)
            actionToBeTriggered()
            return true
        }
    })
```

Kotlin with Android KTX:

```kotlin
view.doOnPreDraw {
    actionToBeTriggered()
}
```
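A one-shot helper like this can be sketched on a plain JVM. `PreDrawListener`, `FakeTreeObserver`, and this `doOnPreDraw` are hypothetical stand-ins and not the Android classes. The anonymous object is kept on purpose: it gives the listener a `this` to unregister itself with, which a plain Kotlin lambda cannot provide.

```kotlin
// Hypothetical listener type standing in for ViewTreeObserver.OnPreDrawListener.
interface PreDrawListener {
    fun onPreDraw(): Boolean
}

// Hypothetical stand-in for ViewTreeObserver.
class FakeTreeObserver {
    private val listeners = mutableListOf<PreDrawListener>()

    val listenerCount: Int
        get() = listeners.size

    fun addOnPreDrawListener(listener: PreDrawListener) {
        listeners += listener
    }

    fun removeOnPreDrawListener(listener: PreDrawListener) {
        listeners -= listener
    }

    // Iterates over a copy so listeners may remove themselves while running.
    fun dispatchOnPreDraw() {
        listeners.toList().forEach { it.onPreDraw() }
    }
}

// Registers a listener that runs `action` once, then unregisters itself.
fun FakeTreeObserver.doOnPreDraw(action: () -> Unit) {
    addOnPreDrawListener(object : PreDrawListener {
        override fun onPreDraw(): Boolean {
            removeOnPreDrawListener(this)
            action()
            return true
        }
    })
}
```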

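The comparisons make it clear that Android-KTX makes code more concise.

Android-KTX provides a good API layer on top of the Android framework and the Support Library. The parts of Android-KTX that cover the Android framework are now available in the GitHub repository. Google has also promised to deliver further parts of Android-KTX, covering the Android Support Library, in upcoming Support Library releases.

Note:

This library is not a final release yet. APIs may be changed to follow conventions, or removed outright, so anyone planning to use it in a project should keep this in mind. As the saying goes, the first person to eat a crab takes a risk.

That said, Google says the current preview is only a beginning. Over the coming months it will iterate on the API based on developer feedback and contributions. Once the API is stable, Google will commit to API compatibility and plans to make Android KTX part of the Android Support Library.

About Kotlin:

Kotlin is a JVM-based programming language developed by JetBrains. It is 100% interoperable with Java and has many features Java does not yet support. It can be used anywhere Java is used today and is commonly used for server-side code and Android apps. Kotlin is also backed by Google and has officially become a first-class language for Android development. As a concise language, Kotlin supports lambdas and closures, which reduce the amount of code and improve productivity.

Learn more on the official Kotlin website: https://kotlinlang.org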

Today's recommended English article: "7 Super Useful Aliases to make your development life easier" by Al-min Nowshad

Original link: https://codeburst.io/7-super-useful-aliases-to-make-your-development-life-easier-fef1ee7f9b73

7 Super Useful Aliases to make your development life easier

```bash
npm install --save express
sudo apt-get update
brew cask install docker
```

Commands like these are part of our daily routine. Whether you are a software developer, DevOps engineer, data scientist, system administrator, or in any other profession, you need to run a few regular commands again and again.

It's tiresome to type these commands every time we need them.

Wouldn’t it be better if we could use some kind of shortcuts for these commands?

Meet Your Friend — Alias

What if I told you that you could type `nis express` instead of `npm install --save express`?

Stay with me and we'll find out how.

What's an alias?

An alias is a short name for a terminal or shell command. Under the hood, the shell replaces it with a longer, more complex command.

How?

Open your terminal, type alias, and press Enter.

You’ll see a list of available aliases on your machine.

If you look closely, you'll see the common pattern for defining an alias:

```bash
alias alias_name="command_to_run"
```

Note that there must be no spaces around `=`. So we are (almost) simply mapping names to commands!

Let's Create a Few

NOTE: For this tutorial, keep your terminal open and test these aliases in that same terminal, because an alias defined at the prompt exists only in the current shell session. Use cd if you need to change directories.

1. Install Node packages

`npm install --save packagename` ➡ `nis packagename`

Type the command below in your terminal and press Enter:

```bash
alias nis="npm install --save "
```

Now you can run `nis express` to install Express in your Node project.

2. Git add and commit

`git add . && git commit -a -m "your commit message"` ➡ `gac "your commit message"`

```bash
alias gac="git add . && git commit -a -m "
```

3. Search Through Terminal History

`history | grep keyword` ➡ `hs keyword`

```bash
alias hs='history | grep'
```

Now, to search our history for everything containing the keyword `test`, we just run `hs test`.

4. Make a directory and enter it

`mkdir -p test && cd test` ➡ `mkcd test`

```bash
alias mkcd='foo(){ mkdir -p "$1"; cd "$1"; }; foo '
```

In bash, the last command before the closing `}` must end with `;`.

5. Show my IP address

`curl http://ipecho.net/plain` ➡ `myip`

```bash
alias myip="curl http://ipecho.net/plain; echo"
```

6. Open a file with admin access

`sudo vim filename` ➡ `svim filename`

```bash
alias svim='sudo vim'
```

7. Update your Linux PC/server

`sudo apt-get update && sudo apt-get upgrade` ➡ `update`

```bash
alias update='sudo apt-get update && sudo apt-get upgrade'
```

Persistent Aliases

You've learned how to create aliases. Now it's time to make them persistent across your system. Depending on which shell you use, copy-paste your aliases into ~/.bashrc or ~/.bash_profile, or into ~/.zshrc if zsh is your default shell.

Example:

As I use zsh as my default shell, I edit the ~/.zshrc file to add my aliases. First, I open it with `vim ~/.zshrc`. The file lives in my home directory, so admin access via sudo is not needed.

Next, I paste my aliases, for example `alias hs='history | grep'`, then save and exit by typing `:wq` in vim.

To apply the changes, I run `source ~/.zshrc` or open a new terminal. From now on, the `hs` command is available in every new shell session.

Bonus

oh-my-zsh is a great enhancement for your terminal that comes with some default aliases and a beautiful interface.
