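Every day we recommend one high-quality open source project on GitHub and one selected English-language article on technology or programming. Follow Open Source Daily (开源日报). QQ group: 202790710; Weibo: https://weibo.com/openingsource; Telegram group: https://t.me/OpeningSourceOrg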

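Today’s recommended open source project: Gopher Reading List, a list of Go blog articles (GitHub link)

Why we recommend it: This is a list of articles about Go, ranging from beginner pieces on why you should learn Go to articles on topics such as building web applications and garbage collection, which assume some background. It should be a real help to anyone who is learning Go.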

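Today’s recommended English article: “Data Privacy: Why It Matters and How to Protect Yourself” by Petros Koutoupis

Original article: https://www.linuxjournal.com/content/data-privacy-why-it-matters-and-how-protect-yourself

Why we recommend it: This article is about privacy, covering why private data leaks and how to protect your own. With the internet as pervasive as it is today, protecting your privacy is essential.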

Data Privacy: Why It Matters and How to Protect Yourself

When it comes to privacy on the internet, the safest approach is to cut your Ethernet cable or power down your device. But, because you can’t really do that and remain somewhat productive, you need other options. This article provides a general overview of the situation, steps you can take to mitigate risks and finishes with a tutorial on setting up a virtual private network.

Sometimes when you’re not too careful, you increase your risk of exposing more information than you should, and often to the wrong recipients. Facebook is a prime example. The company providing the social-media product of the same name has been under scrutiny recently, and for good reason. The issue wasn’t that Facebook directly misused the data, but that a company linked to the previous US presidential election was able to access and inappropriately store a large trove of user data from the social-media site. This data then was used to target specific individuals. How did it happen though? And what does that mean for Facebook (and other social-media) users?

In the case of Facebook, a data analysis firm called Cambridge Analytica was given permission by the social-media site to collect user data from a downloaded application. This data included users’ locations, friends and even the content the users “liked”. The application supposedly was developed to act as a personality test, although the data it mined from users was used for much more, and by methods that can be considered not-so-legal.

At a high level, what does this all mean? Users allowed a third party to access their data without fully comprehending the implications. That data, in turn, was sold to other agencies or campaigns, where it was used to target those same users and their peer networks. Through ignorance, it becomes increasingly easy to “share” data and do so without fully understanding the consequences.

Getting to the Root of the Problem

For some, deleting your social-media account may not be an option. Think about it. By deleting your Facebook account, for example, you may essentially be deleting the platform on which your family and friends choose to share some of the greatest events in their lives. And although I continue to throw Facebook in the spotlight, it isn’t the real problem. Facebook merely is taking advantage of a system with little to no regulation on how user privacy should be handled. Honestly, we, as a society, are making up these rules as we go along.

Recent advancements in this space have pushed for additional protections for web users with an extra emphasis on privacy. Take the General Data Protection Regulation (GDPR), for example. Established by the European Union (EU), the GDPR is a law directly affecting data protection and privacy for all individuals within the EU. It also addresses the export or use of said personal data outside the EU, forcing other regions and countries to redefine and, in some cases, reimplement their services or offerings. This is most likely the reason why you may be seeing updated privacy policies spamming your inboxes.

Now, what exactly does GDPR enforce? Again, the primary objective of GDPR is to give EU citizens back control of their personal data. The compliance deadline is set for May 25, 2018. For individuals, the GDPR ensures that basic identity (name, address and so on), locations, IP addresses, cookie data, health/genetic data, race/ethnic data, sexual orientation and political opinions are always protected. And once the official deadline hits, it initially will affect companies with a presence in an EU country or offering services to individuals living in EU countries. Aside from limiting the control a company would have over your private data, the GDPR also places the burden on that company to be more upfront and honest about any data breach that could have resulted in that data being inappropriately accessed.

Although recent headlines have placed more focus around social-media sites, they are not the only entities collecting data about you. The very same concepts of data collection and sharing even apply to the applications installed on your mobile devices. Home assistants, such as Google Home or Amazon Alexa, constantly are listening. The companies behind these devices or applications stand by their claims that it’s all intended to enrich your experiences, but to date, nothing prevents them from misusing that data—that is, unless other regions and countries follow in the same footsteps as the EU.

Even if those companies harvesting your data don’t ever misuse it, there is still the risk of a security breach (a far too common occurrence) placing that same (and what could be considered private) information about you into the wrong hands. This potentially could lead to far more disastrous results, including identity theft.

Where to Start?

Knowing where to begin is often the most difficult task. Obviously, use strong passwords that have a mix of uppercase and lowercase characters, numbers and punctuation, and don’t use the same password for every online account you have. If an application offers two-factor authentication, use it.
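
The password advice above can be sketched in a few lines of Python. This is only an illustration, not a recommendation of a specific tool: the function name `generate_password` and the default length of 16 are assumptions made for this example, and a password manager would normally do this job for you.

```python
import secrets
import string


def generate_password(length: int = 16) -> str:
    """Return a random password with upper, lower, digit and punctuation."""
    if length < 4:
        raise ValueError("length must be at least 4 to fit all four classes")
    classes = [
        string.ascii_uppercase,
        string.ascii_lowercase,
        string.digits,
        string.punctuation,
    ]
    # Guarantee one character from each class, then fill from the full alphabet.
    chars = [secrets.choice(c) for c in classes]
    alphabet = "".join(classes)
    chars += [secrets.choice(alphabet) for _ in range(length - len(chars))]
    # Shuffle so the guaranteed characters are not always in the first slots.
    secrets.SystemRandom().shuffle(chars)
    return "".join(chars)


if __name__ == "__main__":
    # Generate a different password for every account.
    print(generate_password(20))
```

The `secrets` module is used instead of `random` because it draws from the operating system’s cryptographically secure source.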

The next step involves reviewing the settings of all your social-media accounts. Limit the information in your user profile, and also limit the information you decide to share in your posts, both public and private. Even if you mark a post as private, that doesn’t mean no one else will re-share it to a more public audience. Even if you believe you’re in the clear, that data eventually could leak out. So, the next time you decide to post about legalizing marijuana or “like” a post about something extremely political or polarizing, that decision potentially could impact you when applying for a new job or litigating a case in court—that is, anything requiring background checks.

The information you do decide to share in your user profile or in your posts doesn’t stop with background checks. It also can be used to give unwanted intruders access to various non-related accounts. For instance, the name of your first pet, high school or the place you met your spouse easily can be mined from your social-media accounts, and those things often are used as security questions for vital banking and e-commerce accounts.

Social-Media-Connected Applications

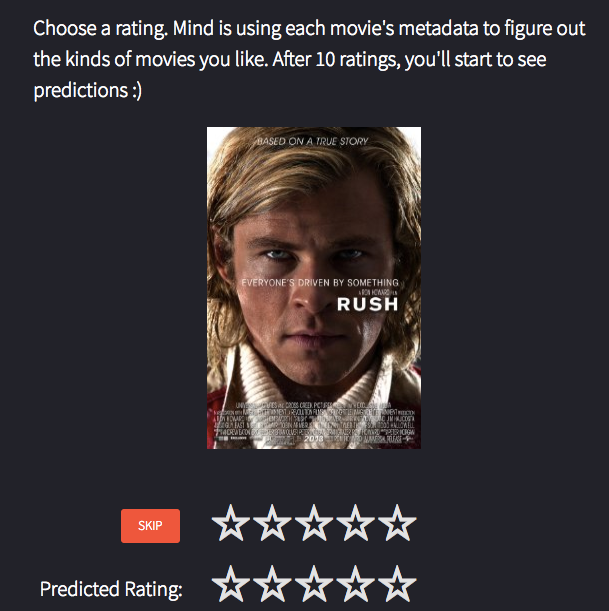

Nowadays, it’s easy to log in to a new application using your social-media accounts. In some cases, you’re coerced or tricked into connecting your account with those applications. Most, if not all, social-media platforms provide a summary of all the applications your account is logged in to. Using Facebook as an example, navigate to your Settings page, and click the Apps Settings page. There you will find such a list (Figure 1).

Figure 1. The Facebook Application Settings Page

As you can see in Figure 1, I’m currently connected to a few accounts, including the Linux Foundation and Super Mario Run. These applications have direct access to my account information, my timeline, my contacts and more.

Applications such as these don’t automatically authenticate you with your social-media account. You need to authorize the application specifically to authenticate you by that account. And even though you may have agreed to it at some point, be sure to visit these sections of your assorted accounts routinely and review what’s there.

So, the next time you are redirected from that social-media site and decide to take that personality quiz or survey to determine what kind of person you are attracted to or even what you would look like as the opposite sex, think twice about logging in using your account. By doing so, you’re essentially agreeing to give that platform access to everything stored in your account.

This is essentially how firms like Cambridge Analytica obtain user information. You never can be too sure of how that information will be used or misused.

Tracking-Based Advertisements

The quickest way for search engines and social-media platforms to make an easy dollar is to track your interests and specifically target related advertisements to you. How often do you search for something on Google or peruse Facebook or Twitter feeds and find advertisements for a product or service you were looking into the other day? These platforms keep track of your location, your search history and your general activities. Sounds scary, huh? In some cases, your network of friends even may see the products or services from your searches.

To avoid such targeting, you need to rethink how you search the internet. Instead of Google, opt for something like DuckDuckGo. With online stores like Amazon, keep your wish lists and registries private or share them with a select few individuals. In the case of social media, you probably should update your Advertisement Preferences.

Figure 2. The Facebook Advertisement Preferences Page

In some cases, you can completely disable targeted advertisements based on your search or activity history. You also can limit what your network of peers can see.

Understanding What’s at Risk

People often don’t think about privacy matters until something affects them directly. A good way to understand what personal data you risk is to request that same data from a service provider. It is this exact data that the service provider may sell to third parties or even allow external applications to access.

You can request this data from Facebook, via the General Account Settings page. At the very bottom of your general account details, there’s a link appropriately labeled “Download a copy of your Facebook data”.

Figure 3. The Facebook General Account Settings Page

It takes a few minutes to collect everything and compress it into a single .zip file, but when complete, you’ll receive an email with a direct link to retrieve this archive.

When extracted, you’ll see a nicely organized collection of all your assorted activities:

$ ls -l

total 24

drwxrwxr-x 2 petros petros 4096 Mar 24 07:11 html

-rw-r--r-- 1 petros petros 6403 Mar 24 07:01 index.htm

drwxrwxr-x 8 petros petros 4096 Mar 24 07:11 messages

drwxrwxr-x 6 petros petros 4096 Mar 24 07:11 photos

drwxrwxr-x 3 petros petros 4096 Mar 24 07:11 videos
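
To get a quick sense of how much sits behind each of those top-level entries, a small script can count the files and bytes under each one. This is a minimal sketch: the function name `summarize_archive` is made up for this example, and it assumes only that the archive has already been extracted into a local directory.

```python
import os


def summarize_archive(root: str) -> dict:
    """Map each top-level entry in root to (file_count, total_bytes)."""
    summary = {}
    for entry in sorted(os.listdir(root)):
        path = os.path.join(root, entry)
        if os.path.isfile(path):
            summary[entry] = (1, os.path.getsize(path))
            continue
        count = size = 0
        # Walk the whole subtree so nested folders are included.
        for dirpath, _dirnames, filenames in os.walk(path):
            for name in filenames:
                count += 1
                size += os.path.getsize(os.path.join(dirpath, name))
        summary[entry] = (count, size)
    return summary


if __name__ == "__main__":
    # Run from inside the extracted archive directory.
    for name, (count, size) in summarize_archive(".").items():
        print(f"{name}: {count} files, {size} bytes")
```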

Open the file index.htm at the root of the directory (Figure 4).

Figure 4. The Facebook Archive Profile Page

Everything, and I mean everything, you have done with this account is stored and never deleted. All of your Friends history, including blocked and removed individuals, is preserved. Every photograph and video uploaded and every private message sent via Messenger is forever retained. Every advertisement you clicked (and in turn every advertiser that has your contact information) and possibly even more is recorded. I don’t even know 90% of the advertisers appearing on my list, nor have I ever agreed to share information with them. I also can tell that a lot of them aren’t even from this country. For instance, why does a German division of eBay have my information through Facebook when I use the United States version of eBay and always have since at least 1999? Why does Sally Beauty care about who I am? Last time I checked, I don’t buy anything through that company (I am not real big into cosmetics or hair-care products).

It’s even been reported that when Facebook is installed on your mobile device, it can and will log all details pertaining to your phone calls and text messages (names, phone numbers, duration of call and so on).

Mobile Devices

I’ve already spent a great deal of time focusing on social media, but data privacy doesn’t end there. Another area of concern is around mobile computing. It doesn’t matter which mobile operating system you are running (Android or iOS) or which mobile hardware you are using (Samsung, Huawei, Apple and so on). The end result is the same. Several mobile applications, when installed, are allowed unrestricted access to more data than necessary.

With this in mind, I went to the Google Play store and looked up the popular Snapchat app. Figure 5 shows a summary of everything Snapchat needed to access.

Figure 5. Access Requirements for a Popular Mobile Application

A few of these categories make sense, but some of the others leave you wondering. For instance, why does Snapchat need to know about my “Device ID & call information” or my WiFi and Bluetooth connection information? Why does it need to access my SMS text messages? What do applications like Snapchat do with this collected data? Do they find ways to target specific products and features based on your history or do they sell it to third parties?

Mobile devices often come preinstalled with software or preconfigured to store or synchronize your personal data with a back-end cloud storage service. This software may be provided by your cellular service provider, the hardware product manufacturer or even by the operating system developer. Review those settings and disable anything that does not meet your standards. Even if you rely on Google to synchronize your photographs and videos to your Google Drive or Photos account, restrict which folders or subdirectories are synchronized.

Want to take this a step further? Think twice about enabling fingerprint authentication. Hide all notifications on your lock screen. Disable location tracking activity. Encrypt your phone.

What about Local Privacy?

There is more. You also need to consider local privacy. There is a certain recipe you always should follow.

Passwords

Use good passwords. Do not enable auto-login, and also disable root logins for SSH. Enable your screen lock when the system is idle for a set time and when the screensaver takes over.

Encryption

Encrypting your home directory or even the entire disk drive limits the possibility that unauthorized individuals will gain access to your personal data while physically handling the device. Most modern Linux distributions offer the option to enable this feature during the installation process. It’s still possible to encrypt an existing system, but before you decide to undertake this often risky endeavor, make sure you first back up anything that’s considered important.

Applications

Review your installed applications. Keep things to a minimum. If you don’t use it, uninstall it. This can help you in at least three ways:

- It will reduce overall clutter and free up storage space on your local hard drive.

- You’ll be less at risk of hosting a piece of software with bugs or security vulnerabilities, reducing the potential of your system being compromised in some form or another.

- There is less of a chance that the same software application is collecting data that it shouldn’t be collecting in the first place.

System Updates

Keep your operating system updated at all times. Major Linux distributions are constantly pushing updates to existing packages that address both software defects and security vulnerabilities.

HTTP vs. HTTPS

Establish secure connections when browsing the internet. Pay attention when transferring data. Is it done over HTTP or HTTPS? (The latter is the secured method.) The last thing you need is for your login credentials to be transferred as plain text to an unsecured website. That’s why so many service providers are securing their platforms with HTTPS, where all requests made are encrypted.
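
The HTTP-versus-HTTPS check is easy to automate for a list of bookmarks or saved login pages. The sketch below only inspects the URL scheme; it does not validate certificates or follow redirects, and the function names `is_https` and `insecure_urls` are invented for this example.

```python
from urllib.parse import urlsplit


def is_https(url: str) -> bool:
    """Return True only when the URL explicitly uses the https scheme."""
    return urlsplit(url).scheme.lower() == "https"


def insecure_urls(urls):
    """Return the URLs from the iterable that would not be sent over HTTPS."""
    return [u for u in urls if not is_https(u)]


if __name__ == "__main__":
    # Hypothetical list of login pages to review.
    pages = ["https://www.linuxjournal.com/", "http://example.com/login"]
    for url in insecure_urls(pages):
        print("not encrypted:", url)
```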

Web Browsers

Use the right web browser. Too many web browsers are less concerned with your privacy and more concerned with your experience. Take the time to review your browsing requirements, and if you need to run in private or “incognito” mode or just adopt a new browser more focused on privacy, take the proper steps to do that.

Add-ons

While on the topic of web browsers, review the list of whatever add-ons are installed and configured. If an add-on is not in use or sounds suspicious, it may be safe to disable or remove it completely.

Script Blockers

Script blockers (NoScript, AdBlock and so on) can help by preventing scripts embedded on websites from tracking you. A bit of warning: these same programs can, and often do, break parts of a large number of the websites you visit.

Port Security

Review and refine your firewall rules. Make sure you drop anything coming in that shouldn’t be intruding in the first place. This may even be a perfect opportunity to discover what local services are listening on external ports (via netstat -lt). If you find that these services aren’t necessary, turn them off.
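
As a rough complement to netstat, a short script can probe a handful of TCP ports and report which ones accept a connection. This is a sketch under stated assumptions: the function name `open_tcp_ports` and the port list are made up for illustration, it covers TCP only, and it should be pointed only at machines you own.

```python
import socket


def open_tcp_ports(host: str, ports, timeout: float = 0.5):
    """Return the ports on host that accept a TCP connection."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception.
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found


if __name__ == "__main__":
    # Hypothetical short list of ports worth checking on the local machine.
    print(open_tcp_ports("127.0.0.1", [22, 80, 443, 3306]))
```

Probing the machine’s external address from another host on the network gives a better picture of what the firewall actually lets through than probing the loopback address.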

Securing Connections

Every device connecting over a larger network is associated with a unique address. The device obtains this unique address from the networking router or gateway to which it connects. This address commonly is referred to as that device’s IP (internet protocol) address. This IP address is visible to any website and any server you visit. You’ll always be identified by this address while using this device.

It’s through this same address that you’ll find advertisements posted on various websites and in various search engines rendered in the native language of the country in which you live. Even if you navigate to a foreign website, this method of targeting ensures that the advertisements posted on that site cater to your current location.

Relying on IP addresses also allows some websites or services to restrict access to visitors from specific countries. The specific range of the address will point to your exact country on the world map.
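
Conceptually, this kind of geolocation is a lookup of an address in a table of address ranges. The sketch below shows the idea with Python’s `ipaddress` module. The table is entirely hypothetical: the three ranges are reserved documentation blocks, and the country codes attached to them are invented for illustration. Real services rely on large, regularly updated databases.

```python
import ipaddress

# Hypothetical table: these are reserved documentation ranges (RFC 5737),
# paired with made-up country codes purely for illustration.
EXAMPLE_RANGES = {
    "192.0.2.0/24": "US",
    "198.51.100.0/24": "DE",
    "203.0.113.0/24": "JP",
}


def country_for(ip: str, ranges=EXAMPLE_RANGES):
    """Return the country code whose range contains ip, or None."""
    address = ipaddress.ip_address(ip)
    for cidr, country in ranges.items():
        if address in ipaddress.ip_network(cidr):
            return country
    return None


if __name__ == "__main__":
    print(country_for("198.51.100.7"))
```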

Virtual Private Network

An easy way to avoid this method of tracking is to rely on the use of Virtual Private Networks (VPNs). It is impossible to hide your IP address directly. You wouldn’t be able to access the internet without it. You can, however, pretend you’re using a different IP address, and this is where the VPN helps.

A VPN extends a private network across a public network by enabling its users to send/receive data across the public network as if their device were connected directly to the private network. Hundreds of VPN providers exist worldwide. Choosing the right one can be challenging, but each provider offers its own set of features and limitations, which should help shrink that list of potential candidates.

Let’s say you don’t want to go with a VPN provider but instead want to configure your own VPN server. Maybe that VPN server is located somewhere in a data center and nowhere near your personal computing device. For this example, I’m using Ubuntu Server 16.04 to install OpenVPN and configure it as a server. Again, this server can be hosted from anywhere: in a virtual machine in another state or province or even in the cloud (such as AWS EC2). If you do host it on a cloud instance, be sure you set that instance’s security group to accept incoming/outgoing UDP packets on port 1194 (your VPN port).

The Server

Log in to your already running server and make sure that all local packages are updated:

$ sudo apt-get update && sudo apt-get upgrade

Install the openvpn and easy-rsa packages:

$ sudo apt-get install openvpn easy-rsa

Create a directory to set up your Certificate Authority (CA) certificates. OpenVPN will use these certificates to encrypt traffic between the server and client. After you create the directory, change into it:

$ make-cadir ~/openvpn-ca

$ cd ~/openvpn-ca/

Open the vars file for editing and locate the section that contains the following parameters:

# These are the default values for fields

# which will be placed in the certificate.

# Don't leave any of these fields blank.

export KEY_COUNTRY="US"

export KEY_PROVINCE="CA"

export KEY_CITY="SanFrancisco"

export KEY_ORG="Fort-Funston"

export KEY_EMAIL="[email protected]"

export KEY_OU="MyOrganizationalUnit"

# X509 Subject Field

export KEY_NAME="EasyRSA"

Modify the fields accordingly, and for the KEY_NAME, let’s define something a bit more generic like “server”. Here’s an example:

# These are the default values for fields

# which will be placed in the certificate.

# Don't leave any of these fields blank.

export KEY_COUNTRY="US"

export KEY_PROVINCE="IL"

export KEY_CITY="Chicago"

export KEY_ORG="Linux Journal"

export KEY_EMAIL="[email protected]"

export KEY_OU="Community"

# X509 Subject Field

export KEY_NAME="server"
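
Because the file itself warns against leaving any of these fields blank, a quick check before sourcing it can save a failed build. The following is a minimal sketch: the function names `parse_key_fields` and `blank_fields` are invented for this example, and it only looks at quoted `export KEY_...="..."` lines like the ones shown above.

```python
import re

# Matches lines such as: export KEY_CITY="Chicago"
EXPORT_LINE = re.compile(r'^\s*export\s+(KEY_\w+)="(.*)"\s*$')


def parse_key_fields(vars_text: str) -> dict:
    """Collect the quoted KEY_* values exported in an easy-rsa vars file."""
    fields = {}
    for line in vars_text.splitlines():
        match = EXPORT_LINE.match(line)
        if match:
            fields[match.group(1)] = match.group(2)
    return fields


def blank_fields(vars_text: str) -> list:
    """Return the names of KEY_* fields whose value is empty."""
    fields = parse_key_fields(vars_text)
    return sorted(k for k, v in fields.items() if not v.strip())


if __name__ == "__main__":
    # Run from inside ~/openvpn-ca, where the vars file lives.
    with open("vars") as handle:
        print("blank fields:", blank_fields(handle.read()))
```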

Export the variables:

$ source vars

NOTE: If you run ./clean-all, I will be doing a rm -rf on /home/ubuntu/openvpn-ca/keys

Clean your environment of old keys:

$ ./clean-all

Build a new private root key, choosing the default options for every field:

$ ./build-ca

Next build a new private server key, also choosing the default options for every field (when prompted to input a challenge password, you won’t input anything for this current example):

$ ./build-key-server server

Toward the end, you’ll be asked to sign and commit the certificate. Type “y” for yes:

Certificate is to be certified until Mar 29 22:27:51 2028 GMT (3650 days)

Sign the certificate? [y/n]:y

1 out of 1 certificate requests certified, commit? [y/n]y

Write out database with 1 new entries

Data Base Updated

You’ll also generate strong Diffie-Hellman keys:

$ ./build-dh

To help strengthen the server’s TLS integrity verification, generate a shared HMAC key:

$ openvpn --genkey --secret keys/ta.key
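
The idea behind this extra key is that both sides hold the same secret and use it to tag packets, so anything arriving without a valid tag can be discarded early. The sketch below illustrates that general HMAC mechanism with Python’s standard library. It is not OpenVPN’s actual packet format or key file layout, and the function names `sign` and `verify` are made up for this example.

```python
import hashlib
import hmac
import secrets


def sign(key: bytes, packet: bytes) -> bytes:
    """Return an HMAC-SHA256 tag for packet under the shared key."""
    return hmac.new(key, packet, hashlib.sha256).digest()


def verify(key: bytes, packet: bytes, tag: bytes) -> bool:
    """Check the tag in constant time; False means drop the packet."""
    return hmac.compare_digest(sign(key, packet), tag)


if __name__ == "__main__":
    shared_key = secrets.token_bytes(32)  # stands in for the shared key file
    tag = sign(shared_key, b"handshake")
    print("valid key:", verify(shared_key, b"handshake", tag))
    print("wrong key:", verify(secrets.token_bytes(32), b"handshake", tag))
```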

So, you’ve finished generating all the appropriate server keys, but now you need to generate a client key for your personal machine to connect to the server. To simplify this task, create this client key from the same server where you generated the server’s keys.

If you’re not in there already, change into the same ~/openvpn-ca directory and source the same vars file from earlier:

$ cd ~/openvpn-ca

$ source vars

Generate the client certificate and key pair, again choosing the default options, and for the purpose of this example, avoid setting a challenge password:

$ ./build-key client-example

As with the server certificate/key, again, toward the end, you’ll be asked to sign and commit the certificate. Type “y” for yes:

Certificate is to be certified until Mar 29 22:32:37 2028 GMT (3650 days)

Sign the certificate? [y/n]:y

1 out of 1 certificate requests certified, commit? [y/n]y

Write out database with 1 new entries

Data Base Updated

Change into the keys subdirectory and copy the keys you generated earlier over to the /etc/openvpn directory:

$ cd keys/

$ sudo cp ca.crt server.crt server.key ta.key dh2048.pem /etc/openvpn/

Extract the OpenVPN sample server configuration file to the /etc/openvpn directory:

$ gunzip -c /usr/share/doc/openvpn/examples/sample-config-files/server.conf.gz | sudo tee /etc/openvpn/server.conf

Let’s use this template as a starting point and apply whatever required modifications are necessary to run the VPN server application. Using an editor, open the /etc/openvpn/server.conf file. The fields you’re most concerned about are listed below:

;tls-auth ta.key 0 # This file is secret

[ ... ]

;cipher BF-CBC # Blowfish (default)

;cipher AES-128-CBC # AES

;cipher DES-EDE3-CBC # Triple-DES

[ ... ]

;user nobody

;group nogroup

Uncomment and add the following lines:

tls-auth ta.key 0 # This file is secret

key-direction 0

[ ... ]

;cipher BF-CBC # Blowfish (default)

cipher AES-128-CBC # AES

auth SHA256

;cipher DES-EDE3-CBC # Triple-DES

[ ... ]

user nobody

group nogroup
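
It is easy to miss a leading semicolon in a long configuration file, so a small check that the edited directives are really active can help. The sketch below is only an illustration: the function names and the `REQUIRED` list are assumptions built from the lines shown above, and the parsing is deliberately naive (it treats everything after a `#` as a comment).

```python
# Directives from the edited configuration shown above.
REQUIRED = [
    "tls-auth ta.key 0",
    "key-direction 0",
    "cipher AES-128-CBC",
    "auth SHA256",
    "user nobody",
    "group nogroup",
]


def active_directives(conf_text: str) -> list:
    """Return config lines that are neither blank nor commented out."""
    active = []
    for raw in conf_text.splitlines():
        line = raw.strip()
        # Lines starting with ';' or '#' are comments in this file format.
        if not line or line[0] in ";#":
            continue
        # Drop a trailing inline comment and normalize the spacing.
        active.append(" ".join(line.split("#", 1)[0].split()))
    return active


def missing_directives(conf_text: str, required=REQUIRED) -> list:
    """List the required directives that are not active in conf_text."""
    active = set(active_directives(conf_text))
    return [d for d in required if d not in active]


if __name__ == "__main__":
    with open("/etc/openvpn/server.conf") as handle:
        print("still missing:", missing_directives(handle.read()))
```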

You’ll need to enable IPv4 packet forwarding via sysctl. Uncomment the field net.ipv4.ip_forward=1 in /etc/sysctl.conf and reload the configuration file:

$ sudo sysctl -p

net.ipv4.ip_forward = 1
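
The same setting can be checked programmatically, for example as part of a provisioning script. The sketch below parses the text of a sysctl configuration file and reports whether forwarding is enabled on an uncommented line. The function name `ip_forward_enabled` is invented for this example.

```python
def ip_forward_enabled(sysctl_conf_text: str) -> bool:
    """Return True if net.ipv4.ip_forward is set to 1 on an active line."""
    enabled = False
    for raw in sysctl_conf_text.splitlines():
        line = raw.strip()
        # sysctl.conf treats lines starting with '#' or ';' as comments.
        if not line or line[0] in "#;":
            continue
        key, sep, value = line.partition("=")
        if sep and key.strip() == "net.ipv4.ip_forward":
            # A later assignment overrides an earlier one.
            enabled = value.strip() == "1"
    return enabled


if __name__ == "__main__":
    with open("/etc/sysctl.conf") as handle:
        print("forwarding configured:", ip_forward_enabled(handle.read()))
```

On Linux, the value currently in effect can also be read directly from /proc/sys/net/ipv4/ip_forward.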

If you’re running a firewall, UDP on port 1194 will need to be open at least to the public IP address of the client machine. Once you do this, start the server application:

$ sudo systemctl start openvpn@server

And if you wish, configure it to start automatically every time the system reboots:

$ sudo systemctl enable openvpn@server

Finally, create the client configuration file. This will be the file the client will use every time it needs to connect to the VPN server machine. To do this, create a staging directory, set its permissions accordingly and copy a client template file into it:

$ mkdir -p ~/client-configs/files

$ chmod 700 ~/client-configs/files/

$ cp /usr/share/doc/openvpn/examples/sample-config-files/client.conf ~/client-configs/base.conf

Open the ~/client-configs/base.conf file in an editor and locate the following areas:

remote my-server-1 1194

;remote my-server-2 1194

[ ... ]

ca ca.crt

cert client.crt

key client.key

[ ... ]

;cipher x

The variables should look something like this:

remote <public IP of server> 1194

;remote my-server-2 1194

[ ... ]

#ca ca.crt

#cert client.crt

#key client.key

[ ... ]

cipher AES-128-CBC

auth SHA256

key-direction 1

# script-security 2

# up /etc/openvpn/update-resolv-conf

# down /etc/openvpn/update-resolv-conf

The remote server IP will need to be adjusted to reflect the public IP address of the VPN server. Comment out the ca, cert and key lines, because the certificates and key are embedded directly in the OVPN file generated in the next step. Be sure to adjust the cipher while also adding the auth and the key-direction variables. Also append the commented script-security and update-resolv-conf lines. Now, generate the OVPN file:

cat ~/client-configs/base.conf \

<(echo -e '<ca>') \

~/openvpn-ca/keys/ca.crt \

<(echo -e '</ca>\n<cert>') \

~/openvpn-ca/keys/client-example.crt \

<(echo -e '</cert>\n<key>') \

~/openvpn-ca/keys/client-example.key \

<(echo -e '</key>\n<tls-auth>') \

~/openvpn-ca/keys/ta.key \

<(echo -e '</tls-auth>') \

> ~/client-configs/files/client-example.ovpn

You should see the newly created file located in the ~/client-configs/files subdirectory:

$ ls ~/client-configs/files/

client-example.ovpn
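
If you need to produce files for several clients, the same concatenation can be scripted. The sketch below mirrors the cat command in Python: it appends the CA certificate, client certificate, client key and shared key to the base configuration as inline blocks. The function name `build_ovpn` is invented for this example, and the paths in the usage section simply reuse the ones from this walkthrough.

```python
from pathlib import Path


def build_ovpn(base_conf: str, ca: str, cert: str, key: str, tls_auth: str) -> str:
    """Append the certificates and keys to base_conf as inline blocks."""
    def block(tag: str, body: str) -> str:
        return f"<{tag}>\n{body.strip()}\n</{tag}>\n"

    parts = [base_conf.rstrip("\n") + "\n"]
    for tag, body in (("ca", ca), ("cert", cert), ("key", key), ("tls-auth", tls_auth)):
        parts.append(block(tag, body))
    return "".join(parts)


if __name__ == "__main__":
    home = Path.home()
    keys = home / "openvpn-ca" / "keys"
    text = build_ovpn(
        (home / "client-configs" / "base.conf").read_text(),
        (keys / "ca.crt").read_text(),
        (keys / "client-example.crt").read_text(),
        (keys / "client-example.key").read_text(),
        (keys / "ta.key").read_text(),
    )
    out = home / "client-configs" / "files" / "client-example.ovpn"
    out.write_text(text)
    # The file contains a private key, so keep it readable by the owner only.
    out.chmod(0o600)
```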

The Client

Copy the OVPN file to the client machine (your personal computing device). In the example below, I’m connected to my client machine and using SCP, transferring the file to my home directory:

$ scp petros@openvpn-server:~/client-configs/files/client-example.ovpn ~/

client-example.ovpn 100% 13KB 12.9KB/s 00:00

Install the OpenVPN package:

$ sudo apt-get install openvpn

If the /etc/openvpn/update-resolv-conf file exists, open your OVPN file (currently in your home directory) and uncomment the following lines:

script-security 2

up /etc/openvpn/update-resolv-conf

down /etc/openvpn/update-resolv-conf

Connect to the VPN server by pointing to the client configuration file:

$ sudo openvpn --config client-example.ovpn

While you’re connected, you’ll be browsing the internet with the public IP address used by the VPN server, not the one assigned by your Internet Service Provider (ISP).

Summary

How does it feel to have your locations, purchasing habits, preferred reading content, search history (including health and illness), political views and more shared with an unknown number of recipients across this mysterious thing we call the internet? It probably doesn’t feel very comforting. This might be information we typically would not want our closest family and friends to know, so why would we want strangers to know it instead? It is far too easy to be complacent and allow such personal data mining to take place. Retaining true anonymity while also enjoying the experiences of the modern web is definitely a challenge. It isn’t impossible, though.
