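Every day we recommend one quality open source project from GitHub and one selected original English article on technology or programming. Follow Open Source Daily. QQ group: 202790710; Weibo: https://weibo.com/openingsource; Telegram group: https://t.me/OpeningSourceOrg

Today's recommended open source project: "Interviews and Jargon: FETopic". Portal: GitHub link

Why we recommend it: this project collects all kinds of interview questions (technical ones, of course), along with the various moves that come up in interviews, such as asking questions and introducing yourself. That is not even the main point: it also happens to include a complete collection of interview jargon, so there is really no reason to pass it up. It never hurts to take a look; after all, you can only have a good conversation with your interviewer once you are on the same wavelength.

Today's recommended English article: "How to make a beautiful, tiny npm package and publish it" by Jonathan Wood

Original link: https://medium.freecodecamp.org/how-to-make-a-beautiful-tiny-npm-package-and-publish-it-2881d4307f78

Why we recommend it: as the title suggests, today's article is about how to make an npm package. If you would like to learn about npm in Node.js first, take a look at yesterday's daily.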

How to make a beautiful, tiny npm package and publish it

If you’ve created lots of npm modules, you can skip ahead. Otherwise, we’ll go through a quick intro.

TL;DR

An npm module only requires a package.json file with name and version properties.

Hey!

There you are.

Just a tiny elephant with your whole life ahead of you.

You’re no expert in making npm packages, but you’d love to learn how.

All the big elephants stomp around with their giant feet, making package after package, and you’re all like:

“I can’t compete with that.”

Well, I’m here to tell you that you can!

No more self doubt.

Let’s begin!

You’re not an Elephant

I meant that metaphorically.

Ever wondered what baby elephants are called?

Of course you have. A baby elephant is called a calf.

I believe in you

Self doubt is real.

That’s why no one ever does anything cool.

You think you won’t succeed, so instead you do nothing. But then you glorify the people doing all the awesome stuff.

Super ironic.

That’s why I’m going to show you the tiniest possible npm module.

Soon you’ll have hordes of npm modules flying out of your fingertips. Reusable code as far as the eye can see. No tricks, no complex instructions.

The Complex Instructions

I promised I wouldn’t…

…but I totally did.

They’re not that bad. You’ll forgive me one day.

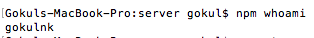

Step 1: npm account

You need one. It’s just part of the deal.

Step 2: login

Did you make an npm account?

Yeah you did.

Cool.

I’m also assuming you can use the command line / console etc. I’m going to be calling it the terminal from now on. There’s a difference apparently.

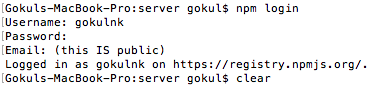

Go to your terminal and type:

npm adduser

You can also use the command:

npm login

Pick whichever command jives with you.

You’ll get a prompt for your username, password and email. Stick them in there!

You should get a message akin to this one:

Logged in as bamblehorse to scope @username on https://registry.npmjs.org/.

Nice!

Let’s make a package

First we need a folder to hold our code. Create one in whichever way is comfortable for you. I’m calling my package tiny because it really is very small. I’ve added some terminal commands for those who aren’t familiar with them.

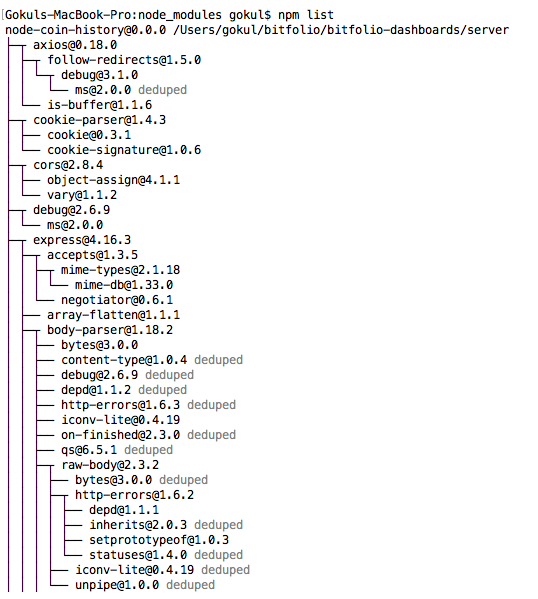

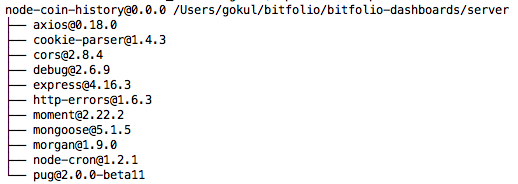

md tiny

In that folder we need a package.json file. If you already use Node.js — you’ve met this file before. It’s a JSON file which includes information about your project and has a plethora of different options. In this tutorial, we are only going to focus on two of them.

cd tiny && touch package.json

How small can it really be, though?

Really small.

All tutorials about making an npm package, including the official documentation, tell you to enter certain fields in your package.json. We’re going to keep trying to publish our package with as little as possible until it works. It’s a kind of TDD for a minimal npm package.

Please note: I’m showing you this to demonstrate that making an npm package doesn’t have to be complicated. To be useful to the community at large, a package needs a few extras, and we’ll cover that later in the article.

Publishing: First attempt

To publish your npm package, you run the well-named command: npm publish.

So we have an empty package.json in our folder and we’ll give it a try:

npm publish

Whoops!

We got an error:

npm ERR! file package.json
npm ERR! code EJSONPARSE
npm ERR! Failed to parse json
npm ERR! Unexpected end of JSON input while parsing near ''
npm ERR! File: package.json
npm ERR! Failed to parse package.json data.
npm ERR! package.json must be actual JSON, not just JavaScript.
npm ERR!
npm ERR! Tell the package author to fix their package.json file. JSON.parse

npm doesn’t like that much.

Fair enough.
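The complaint comes down to JSON parsing: an empty file is not valid JSON, while even an empty object is. Here is a minimal sketch in Node.js, assuming a hypothetical helper called `isValidJson` that is not part of npm and only illustrates the idea:

```javascript
// Hypothetical helper for illustration: reports whether a string parses as JSON.
function isValidJson(text) {
  try {
    JSON.parse(text);
    return true;
  } catch (err) {
    // JSON.parse throws a SyntaxError on malformed input, including "".
    return false;
  }
}

console.log(isValidJson(""));   // false: this is what an empty package.json contains
console.log(isValidJson("{}")); // true: it parses, though npm still wants more fields
```

Parsing is only the first hurdle, which is why the next attempts fail for different reasons.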

Publishing: Strike two

Let’s give our package a name in the package.json file:

{
  "name": "@bamblehorse/tiny"
}

You might have noticed that I added my npm username onto the beginning.

What’s that about?

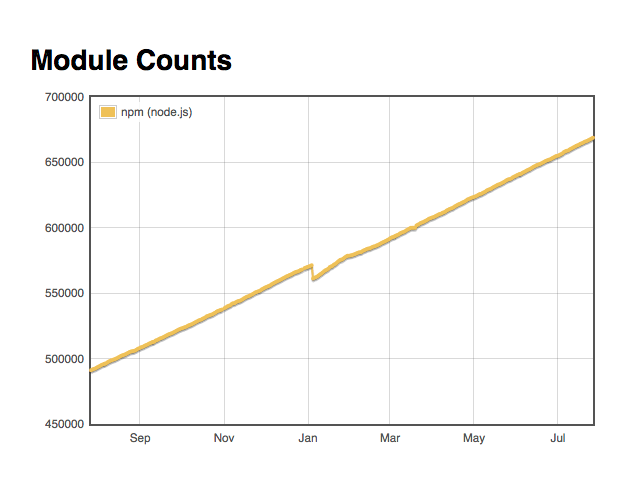

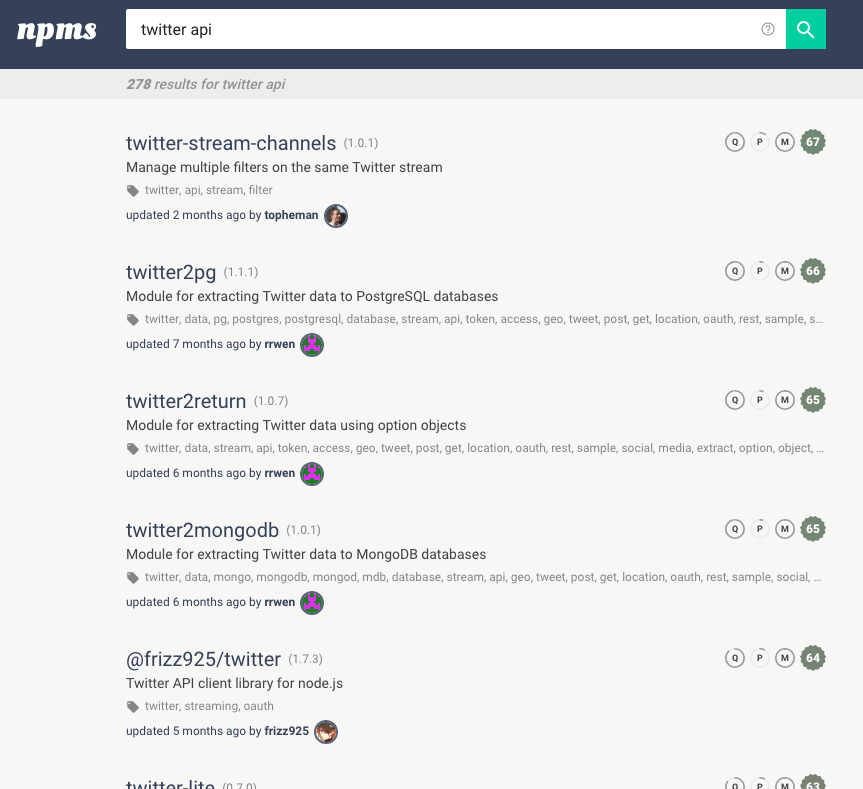

By using the name @bamblehorse/tiny instead of just tiny, we create a package under the scope of our username. It’s called a scoped package. It allows us to use short names that might already be taken. For example, a package named tiny already exists on npm.

You might have seen this with popular libraries such as the Angular framework from Google. They have a few scoped packages such as @angular/core and @angular/http.

Pretty cool, huh?
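To make the naming rule concrete, here is a small sketch that splits a package name into its scope and bare name. The helper `parsePackageName` is hypothetical and only handles the simple `@scope/name` shape described above, not every rule npm applies to names:

```javascript
// Hypothetical helper for illustration: splits a package name into scope and name.
function parsePackageName(fullName) {
  // A scoped name looks like "@scope/name"; anything else is treated as unscoped.
  const match = /^@([^/]+)\/([^/]+)$/.exec(fullName);
  if (match) {
    return { scope: match[1], name: match[2] };
  }
  return { scope: null, name: fullName };
}

console.log(parsePackageName("@bamblehorse/tiny")); // { scope: 'bamblehorse', name: 'tiny' }
console.log(parsePackageName("tiny"));              // { scope: null, name: 'tiny' }
```

Both names end in tiny, but they are different packages because only one of them lives under a scope.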

We’ll try and publish a second time:

npm publish

The error is smaller this time — progress.

npm ERR! package.json requires a valid "version" field

Each npm package needs a version so that developers know if they can safely update to a new release of your package without breaking the rest of their code. The versioning system npm uses is called SemVer, which stands for Semantic Versioning.

Don’t worry too much about understanding the more complex version names, but here’s their summary of how the basic ones work:

Given a version number MAJOR.MINOR.PATCH, increment the:

1. MAJOR version when you make incompatible API changes,

2. MINOR version when you add functionality in a backwards-compatible manner, and

3. PATCH version when you make backwards-compatible bug fixes.

Additional labels for pre-release and build metadata are available as extensions to the MAJOR.MINOR.PATCH format.
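The three rules above can be sketched as a tiny function. This is only an illustration, assuming a hypothetical helper named `bump` that handles plain MAJOR.MINOR.PATCH strings and ignores pre-release and build labels:

```javascript
// Hypothetical helper for illustration: returns the next version for a release type.
// Only plain MAJOR.MINOR.PATCH strings are accepted, no pre-release or build labels.
function bump(version, release) {
  const match = /^(\d+)\.(\d+)\.(\d+)$/.exec(version);
  if (!match) {
    throw new TypeError(`Not a plain MAJOR.MINOR.PATCH version: ${version}`);
  }
  const [major, minor, patch] = match.slice(1).map(Number);
  switch (release) {
    case "major":
      return `${major + 1}.0.0`; // incompatible API change: minor and patch reset
    case "minor":
      return `${major}.${minor + 1}.0`; // backwards-compatible feature: patch resets
    case "patch":
      return `${major}.${minor}.${patch + 1}`; // backwards-compatible bug fix
    default:
      throw new RangeError(`Unknown release type: ${release}`);
  }
}

console.log(bump("1.4.2", "major")); // 2.0.0
console.log(bump("1.4.2", "minor")); // 1.5.0
console.log(bump("1.4.2", "patch")); // 1.4.3
```

Notice that the lower numbers reset to zero whenever a higher one is incremented.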

Publishing: The third try

We’ll give our package.json the version: 1.0.0 — the first major release.

{
  "name": "@bamblehorse/tiny",
  "version": "1.0.0"
}
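As a recap of the attempts so far, here is a rough sketch of the check we have been running into. The helper `missingFields` is hypothetical and much simpler than npm's real validation; it only looks for the two fields this tutorial focuses on:

```javascript
// The two fields this tutorial treats as the bare minimum.
const REQUIRED_FIELDS = ["name", "version"];

// Hypothetical helper for illustration: lists required fields that are absent or empty.
function missingFields(manifest) {
  return REQUIRED_FIELDS.filter(
    (field) => typeof manifest[field] !== "string" || manifest[field] === ""
  );
}

console.log(missingFields({}));                                                   // [ 'name', 'version' ]
console.log(missingFields({ name: "@bamblehorse/tiny" }));                        // [ 'version' ]
console.log(missingFields({ name: "@bamblehorse/tiny", version: "1.0.0" }));      // []
```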

Let’s publish!

npm publish

Aw shucks.

npm ERR! publish Failed PUT 402
npm ERR! code E402
npm ERR! You must sign up for private packages : @bamblehorse/tiny

Allow me to explain.

Scoped packages are automatically published privately because, as well as being useful for single users like us, they are also utilized by companies to share code between projects. If we had published a normal package, then our journey would end here.

All we need to do is tell npm that we actually want everyone to be able to use this module, rather than keeping it locked away in its vaults. So instead we run:

npm publish --access=public

Boom!

+ @bamblehorse/tiny@1.0.0

We receive a plus sign, the name of our package and the version.
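As an aside that the original walkthrough does not cover: npm also reads a `publishConfig` field from package.json, so the access choice can be recorded in the manifest instead of being passed as a flag on each publish. A minimal sketch, assuming a hypothetical helper named `withPublicAccess` that only builds the object and does not touch any file:

```javascript
// Hypothetical helper for illustration: returns a copy of a manifest
// with publishConfig.access set to "public". The input is not modified.
function withPublicAccess(manifest) {
  return {
    ...manifest,
    publishConfig: { ...manifest.publishConfig, access: "public" },
  };
}

const minimalManifest = { name: "@bamblehorse/tiny", version: "1.0.0" };

// Print the result in the shape it would have inside package.json.
console.log(JSON.stringify(withPublicAccess(minimalManifest), null, 2));
```

Whether to use the flag or the field is a matter of taste; the flag keeps the manifest as small as possible, which is the point of this exercise.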

We did it — we’re in the npm club.

I’m excited.

You must be excited.

Did you catch that?

npm loves you

Cute!

Version one is out there!

Let’s regroup

If we want to be taken seriously as a developer, and we want our package to be used, we need to show people the code and tell them how to use it. Generally we do that by putting our code somewhere public and adding a readme file.

We also need some code.

Seriously.

We have no code yet.

GitHub is a great place to put your code. Let’s make a new repository.

README!

I got used to typing README instead of readme.

You don’t have to do that anymore.

It’s a funny convention.

We’re going to add some funky badges from shields.io to let people know we are super cool and professional.

Here’s one that lets people know the current version of our package:

This next badge is interesting. It failed because we don’t actually have any code.

We should really write some code…

License to code

That title is definitely a James Bond reference.

I actually forgot to add a license.

A license just lets people know in what situations they can use your code. There are lots of different ones.

There’s a cool page called insights in every GitHub repository where you can check various stats — including the community standards for a project. I’m going to add my license from there.

Then you hit this page:

The Code

We still don’t have any code. This is slightly embarrassing.

Let’s add some now before we lose all credibility.

module.exports = function tiny(string) {
  if (typeof string !== "string") throw new TypeError("Tiny wants a string!");
  return string.replace(/\s/g, "");
};

There it is.

A tiny function that removes all spaces from a string.

So all the code an npm package needs is an index.js file. This is the default entry point to your package. You can set it up in different ways as your package becomes more complex.

But for now this is all we need.
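A quick way to convince yourself the function behaves is to run it on a few inputs. The sketch below repeats the function as a plain declaration so it runs on its own; the sample strings are made up for illustration. Note that `\s` matches any whitespace, so tabs and newlines are removed too:

```javascript
// Same logic as index.js, repeated here so the snippet is self-contained.
function tiny(string) {
  if (typeof string !== "string") throw new TypeError("Tiny wants a string!");
  return string.replace(/\s/g, "");
}

console.log(tiny("So much space!"));   // Somuchspace!
console.log(tiny("tabs\tand\nlines")); // tabsandlines

try {
  tiny(1337); // not a string, so this throws
} catch (err) {
  console.log(err.message); // Tiny wants a string!
}
```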

Are we there yet?

We’re so close.

We should probably update our minimal package.json and add some instructions to our readme.md.

Otherwise nobody will know how to use our beautiful code.

package.json

{
  "name": "@bamblehorse/tiny",
  "version": "1.0.0",
  "description": "Removes all spaces from a string",
  "license": "MIT",
  "repository": "bamblehorse/tiny",
  "main": "index.js",
  "keywords": [
    "tiny",
    "npm",
    "package",
    "bamblehorse"
  ]
}

We’ve added:

- description: a short description of the package

- repository: GitHub friendly — so you can write username/repo

- license: MIT in this case

- main: the entry point to your package, relative to the root of the folder

- keywords: a list of keywords used to discover your package in npm search
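To see what `main` is for, here is a rough sketch of how the entry file gets picked. The helper `entryPoint` and the install path are assumptions for illustration; real module resolution in Node.js has more rules than this. The one fact it relies on is that index.js in the package root is the fallback when `main` is absent:

```javascript
const path = require("path");

// Hypothetical helper for illustration: picks the file that require() would load
// in the simple case. POSIX-style joins keep the output the same on every platform.
function entryPoint(packageDir, manifest) {
  // Without a "main" field, the fallback is index.js in the package root.
  return path.posix.join(packageDir, manifest.main || "index.js");
}

const installDir = "node_modules/@bamblehorse/tiny"; // assumed install location

console.log(entryPoint(installDir, { main: "index.js" }));    // node_modules/@bamblehorse/tiny/index.js
console.log(entryPoint(installDir, {}));                      // node_modules/@bamblehorse/tiny/index.js
console.log(entryPoint(installDir, { main: "lib/tiny.js" })); // node_modules/@bamblehorse/tiny/lib/tiny.js
```

So writing `"main": "index.js"` is optional here, but spelling it out makes the entry point obvious to anyone reading the manifest.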

readme.md

@bamblehorse/tiny

npm (scoped) npm bundle size (minified)

Removes all spaces from a string.

Install

$ npm install @bamblehorse/tiny

Usage

const tiny = require("@bamblehorse/tiny");

tiny("So much space!");
//=> "Somuchspace!"

tiny(1337);
//=> Uncaught TypeError: Tiny wants a string!
//     at tiny (<anonymous>:2:41)
//     at <anonymous>:1:1

We’ve added instructions on how to install and use the package. Nice!

If you want a good template for your readme, just check out popular packages in the open source community and use their format to get you started.

Done

Let’s publish our spectacular package.

Version

First we’ll update the version with the npm version command.

This is a major release so we type:

npm version major

Which outputs:

v2.0.0

Publish!

Let’s run our new favorite command:

npm publish

It is done:

+ @bamblehorse/tiny@2.0.0

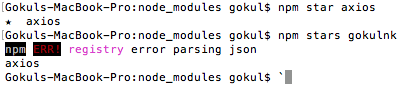

Cool stuff

Package Phobia gives you a great summary of your npm package. You can check out each file on sites like Unpkg too.

Thank you

That was a wonderful journey we just took. I hope you enjoyed it as much as I did.

Please let me know what you thought!

Star the package we just created here:

★ Github.com/Bamblehorse/tiny ★
