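Open Source Daily recommends one high-quality open-source project from GitHub and one selected English-language tech or programming article every day. Keep reading Open Source Daily to build a habit of daily learning.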

Today's recommended open-source project: "Writing Markdown in a Web Page: remark"

Today's recommended English article: "Humans, Machines, and the Future of Education"

Today's recommended open-source project: "Writing Markdown in a Web Page: remark". Link:

GitHub link

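Why we recommend it: Perhaps you find it too much trouble to write separate Markdown files one by one, and you just want to write a simple Markdown slideshow to present. This project can help. It lets you use a textarea tag directly inside an HTML file as the Markdown area, add whatever styles you need in that same file, and then call a function to convert it into a slideshow. Open the file in a browser and you are ready to present.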
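The workflow described above can be sketched as a small Python script that writes such an HTML file. This is only an illustration under stated assumptions: it assumes the project is the remark slideshow tool, that its bundle is served from the URL held in `REMARK_SCRIPT_URL`, and that `remark.create()` is the conversion call. The helper `build_slideshow_page` and the sample slides are hypothetical names introduced here.

```python
from html import escape

# Assumption: CDN location of the remark bundle; check the project's README.
REMARK_SCRIPT_URL = "https://remarkjs.com/downloads/remark-latest.min.js"

# The Markdown lives inside a textarea; styles go in the same HTML file.
PAGE_TEMPLATE = """<!DOCTYPE html>
<html>
  <head>
    <meta charset="utf-8">
    <title>{title}</title>
    <style>
{css}
    </style>
  </head>
  <body>
    <textarea id="source">
{markdown}
    </textarea>
    <script src="{script_url}"></script>
    <script>
      var slideshow = remark.create();
    </script>
  </body>
</html>
"""


def build_slideshow_page(markdown: str, title: str = "Slides", css: str = "") -> str:
    """Return a complete HTML page embedding the Markdown in a textarea."""
    return PAGE_TEMPLATE.format(
        title=escape(title),
        css=css,
        # Escape &, < and > so the Markdown cannot close the textarea early.
        markdown=escape(markdown, quote=False),
        script_url=REMARK_SCRIPT_URL,
    )


# Hypothetical sample deck: a line containing only --- separates slides.
SLIDES = """class: center, middle

# Hello, remark

---

# Agenda

1. Introduction
2. Demo
"""

if __name__ == "__main__":
    with open("slides.html", "w", encoding="utf-8") as f:
        f.write(build_slideshow_page(SLIDES, title="Demo"))
```

Running the script writes `slides.html`; opening that file in a browser should show the deck, provided the assumed script URL is reachable.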

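Today's recommended English article: "Humans, Machines, and the Future of Education" by Jonathan Follett

Original article: https://towardsdatascience.com/humans-machines-and-the-future-of-education-b059430de7c

Why we recommend it: Adding artificial intelligence may make education more effective, through more personalized learning paths or a better learning experience.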

Humans, Machines, and the Future of Education

Figure 01: Education became formalized to accelerate our understanding of difficult, complicated, or abstract topics. [Illustration: “The School Teacher”, Albrecht Dürer, 1510 woodcut, National Gallery of Art, Open Access]

How do humans learn? Our first learning is experiential: responding to the interactions between ourselves and the womb around us. Experiential learning even extends beyond the womb, to our first teachers — our mother and other people who imprint upon us in ways intentional or not — singing a song and moving themselves. In fact, much of the learning we’ve done over human history is this stimulus-response model of simply living, and, in the process, continuing to learn. This inherent model of experiential learning is well and fine for any number of things — such as how to act toward other people, how to hoe a row, or where to find fresh water. It is less effective, however, in helping us master more nuanced things such as grammar, philosophy, and chemistry. And it is entirely inadequate to teach us highly complex intellectual fields, such as theoretical physics. That is one reason why education became formalized — to accelerate, or even merely make possible, our understanding of difficult, complicated, or abstract topics.

In the west, formal education reached its modern pinnacle in the university, whose origins go back to the 11th century in Bologna, Italy. Rooted in Christian education and an extension of monastic education — which itself was adopted from Buddhist tradition and the far east — higher education was, historically, an oasis of knowledge for the privileged few. Integrated into cities such as Paris, Oxford, and Prague, while the university was its own place and space, it lived within and as part of a larger city and community. The focus was on the Arts, a term used very differently than we think about it today and including all or a subset of seven Arts: arithmetic, geometry, astronomy, music theory, grammar, logic, and rhetoric.

The university experience of today looks very different. The number of subjects has exploded, with “Arts and Sciences” representing just one “college” within a university, and one that, for some decades, has been diminished in importance compared to more practical and applied knowledge such as business and engineering. A higher proportion of citizens than ever now attend an institute of higher learning, with knowledge having been democratized substantially even as it becomes increasingly essential to merely eking out a simple living. And, in most cases, the religious roots of universities in general or your school in particular have been hidden or downplayed as our world becomes increasingly secular. These changes have taken place at different paces and in different ways over about a thousand years. While the differences are significant and reflect the ways in which knowledge and civilization have evolved, given the magnitude of time involved, we might even consider the changes modest.

Figure 02: Historically, an oasis of knowledge for the privileged few, the university was integrated into cities such as Paris, Oxford, and Prague. [Illustration: “University”, Themistocles von Eckenbrecher, 1890 drawing, National Gallery of Art, Open Access]

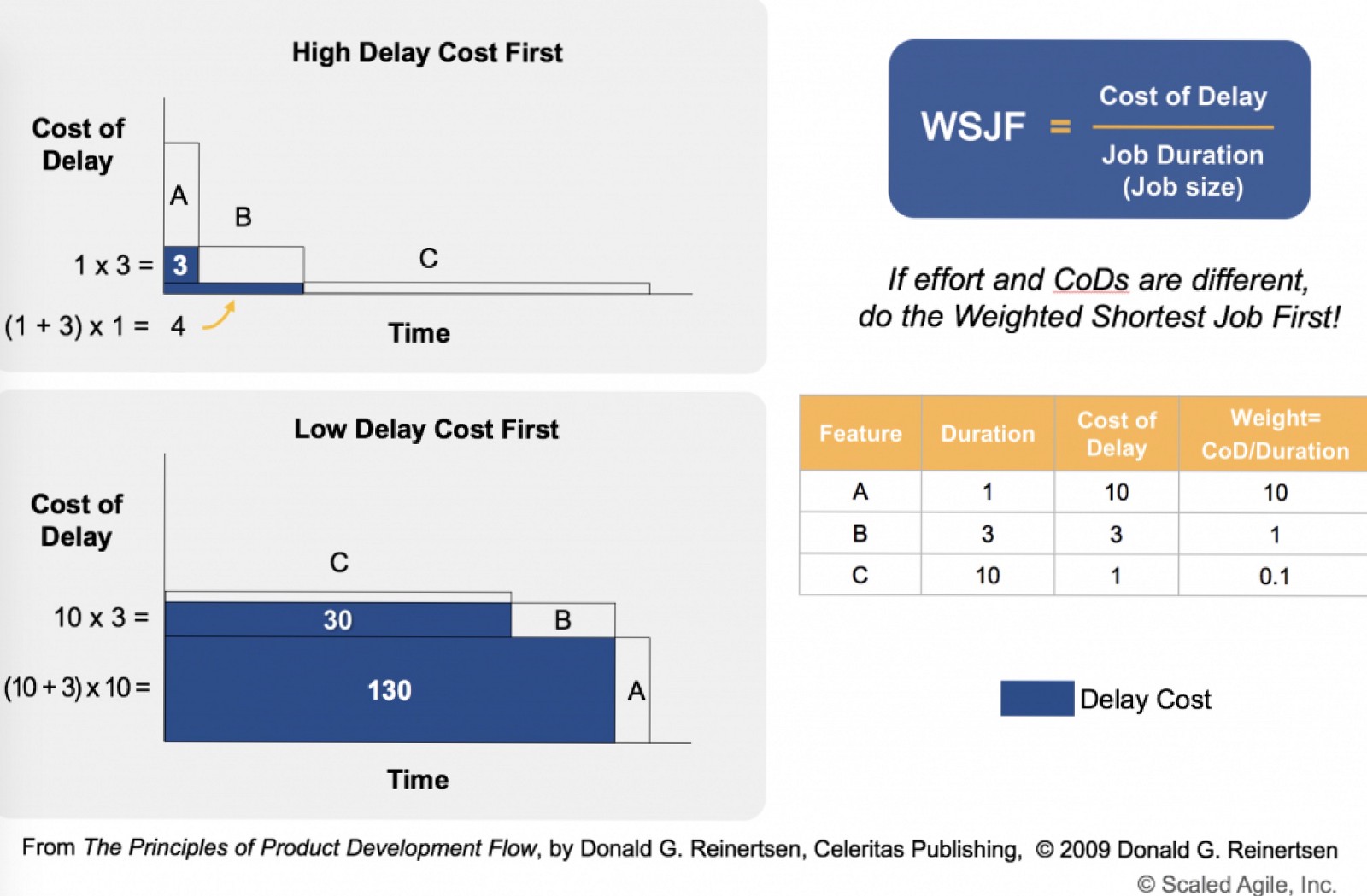

Of course, in light of the rapid changes in technology of recent decades, and its effects both economically and socially, formal education is woefully out-of-date. Designed in an analog world and forcing everyone to take the same curriculum, where different subjects were taught more-or-less the same way, formal education is conducted clumsily at best. A variety of different philosophies and pedagogy have emerged that introduce different methods of learning, but these are not evenly distributed. The standard method of education can be mind-numbingly rote — the cavernous lecture halls with hundreds of students for each professor. Regardless of these barriers, we aspire to a better way. The next wave of significant innovation in education looks like it will be extreme. In the upcoming decades we can expect the confluence of AI and other emerging technologies, and ever-increasing knowledge about ourselves, the human animal — how we learn, how we live, how we participate in a society of connected people — to revolutionize education.

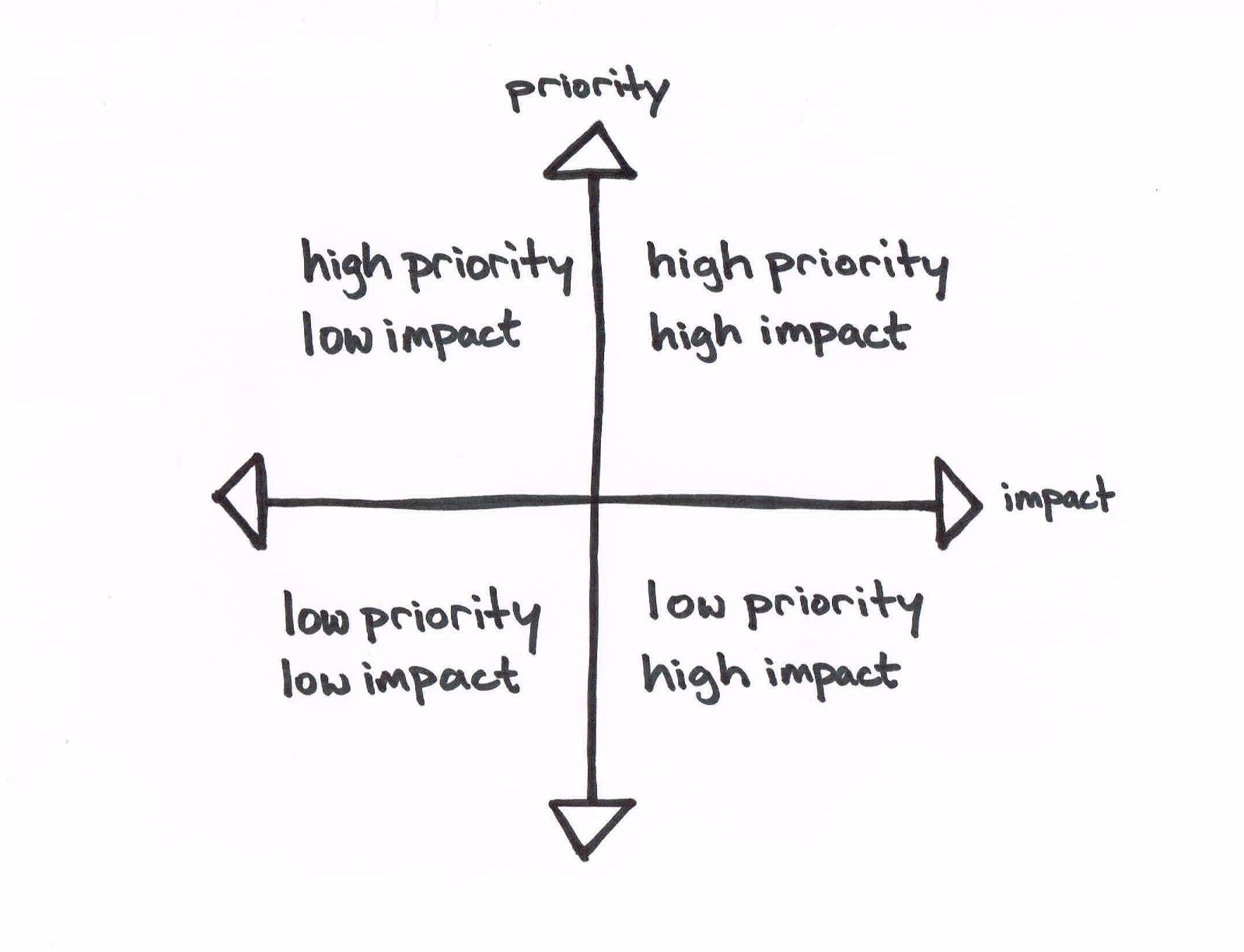

How will education change in a world of emerging technologies? How will AI assist, enhance, administer, regulate, and otherwise alter the complex interactions that drive human learning? By examining some of the gaps in the current model of formal education, we can see some of the places where such technology can and will play a significant role. In fact, the breadcrumbs for these changes are already here, right before us.

AI and the Path to a Personalized Curriculum

Ben Nelson, the Founder of Minerva Schools at KGI, espouses a connected education, one that leverages the information sharing power of technology, with a systems thinking approach that sees the importance of connecting each part of the experience together. Minerva Schools’ philosophy and structure is an inspiring glimpse into the future of education. “In the education system of the future, online artificial intelligence and virtual reality platforms will become very important in the transmission of knowledge. Education should teach us to be more flexible and provide tools for transformation,” says Nelson, during a panel discussion on the future of education organized by ESADE Business and Law School. According to Nelson, such a paradigm shift “will lead to a more personalised learning experience, a better user experience for each student.”

Some of the first steps in customizing curriculum on a per-student basis are happening in the field already. There are a number of companies developing AI-driven software for the education market with this purpose in mind. And while the artificial intelligence may provide an initial assessment and learning assignments, there is an important element of human collaboration as well. Teachers can work in concert with these tools, and can modify and even override the recommendations to better suit the student. For example, Knewton, a New York-based e-learning company, uses AI-enabled adaptive learning technology to identify gaps in a student’s existing knowledge and provide the most appropriate coursework for a variety of subjects, which include math, chemistry, statistics, and economics. More than 14 million students have already taken Knewton’s courses. EdTech software from Cognii uses conversation, driven by an AI-powered virtual learning assistant, to tutor students and provide feedback in real time. The Cognii virtual assistant is customized to each student’s needs. And math-focused Carnegie Learning from Pittsburgh has developed an intelligent instruction design that uses AI to deliver to students the right topics at the right time, enabling a completely personalized cycle of learning, testing, and feedback. These examples, while nascent, are indicative of a push towards AI-enabled personalized learning, which over time will change the face of formal education.

Optimizing the Educational Experience, From Passive to Active Learning

Personalization, however, is just the tip of the iceberg when it comes to changes in the delivery of education. For insight into how formal education will be further transformed in the future, we spoke with Nelson about Minerva Schools’ learning philosophy: “If you think about a traditional education in a college or university, you think about it in a conglomeration of really independent units,” says Nelson. “You take 30 courses while you’re in school. There are 30 different professors who teach these courses. Those professors really don’t coordinate with one another very much. In fact, they have no idea what the makeup of their student body is, within their particular class. Maybe certain students will have taken courses XYZ. Other students will have taken courses ABC. … The nature of that education is very much on a unitized basis.”

Nelson sees the university approach — a lecture-based format oriented towards the dissemination of information — as one desperately in need of innovation. “Students come in [and] a professor speaks for all or the overwhelming majority of the time. Even when a professor will take questions, the lecture effectively passes from one professor to one student. The majority of students are sitting passively in class,” says Nelson. “There are two problems with these two models. Curricularly, from a curricular design perspective, the world doesn’t work in discrete parts of subject based knowledge.”

“The world isn’t divided into physics in isolation from biology in most cases, or politics isolated from economics, in all cases, etc. The learning of discrete pieces of information isn’t very much related to the way the world works. It’s also, by the way, not related to the way people think.”

“When we think about somebody who is wise or can think about appropriate applications of practical knowledge to particular situations, we think of somebody who has learned lessons in one context, and applies them to another,” says Nelson. “When you deliver education in discrete packages, it turns out the brain has a very hard time with understanding that. Secondly, when you’re sitting in an environment where you’re passively receiving information, the retention in the brain of that information is minuscule. Study after study has shown that a typical test and lecture based class, within six months at the end of the semester, students have forgotten 90% of what they knew during the final, which basically means it’s ineffective. [At Minerva,] we change both of these aspects.”

Nelson describes Minerva’s approach to curriculum architecture, which is supported and delivered by their tech platform. “First, we create a curriculum and a delivery mechanism that ensures that your education isn’t looked at on a course by course basis, but is looked at from a curricular perspective. The way we do that is that we codify dozens of different elements, learning objectives that we refer to as habits of mind or foundational concepts. Habits of mind are things that become automatic with practice. Foundational concepts are things that are generative, things that once you learn, you can build off of in many different ways. Then these learning objectives get introduced in one course in a particular context. They then get presented in different contexts in the very same course, and then they show up in courses throughout the curriculum in new contexts again, until you have learned generalizable learning objectives. [This] means that you have learned things conceptually and the ways to apply them practically in multiple contexts, which means that when you encounter original situations, original contexts, you’ll be able to know what to do in those situations.”

“The piece of technology that we have deployed in part, but are going to continue to build on and work on in the very near future is this idea of the scaffolded curriculum: introducing a particular learning objective, and then tracking how that learning objective is applied and mastered across 30 different professors in four years,” says Nelson. “This doesn’t sound like such a radical improvement, but it fundamentally changes the nature of education.”

“That, by the way, can only be done with technology,” says Nelson. “Without technology, you cannot track individual student progress and modify their personalized intellectual development in a classroom environment. You need to have the data. You need to have data in a way the professor can react and do something with it. Without technology, it’s just impossible. It’s impossible to collect the data. It’s impossible to disseminate it. It’s impossible to present it to the professor in real time, in a format which they can use it.”

While connected technologies are an enabler of this approach, just as important is the knowledge of and will to implement better approaches to learning. “We make sure that 100% of our classes are fully active,” says Nelson. “What does fully active mean? It means that our professors aren’t allowed to talk for more than four minutes at a time. Their lesson plans are structured in such a way that the professor’s job is really to facilitate novel application from students on what they have studied … and actually use class time to further the intellectual development of the students. We do this because we’re able to do this because we’ve built an entirely new learning environment.” Two years after the end of an active learning class, students retain 70% of the information, in contrast with a mere 10% retention rate for lecture and test based classes. “Minerva courses are extremely engaging,” says Nelson. “They’re very intensive. They’re integrative, in the sense that you bring together different areas and fields together, and they’re effective.”

Minerva’s educational environment and classes are conducted entirely online via live video. “All of the students live together, but the professors are all over the world. We hire professors to be on our staff full time, but we don’t let geography constrain them, which is another beauty of having a platform that enables close interaction between professor and student. That allows for two things. It allows for our professors to be the best in the world in teaching their subjects, and it enables the students to change their location. That’s why, at Minerva, students live in seven different countries by the time they graduate, because they don’t have to take the faculty with them. The faculty is accessible anywhere you are in the world. It gives our students the opportunity to both have a very deep formal education, as well as the ability to apply that in multiple contexts in the real world.”

From Active Learning to Augmented Reality

Virtual and augmented reality are emerging technologies that have exciting educational applications — ones that align well with the idea of active learning espoused by Nelson and others. Stephen Anderson, a design education leader who is Head of Practice Development at Capital One, sees emerging tech like augmented reality as the next step to creating an environment of continual learning and positive feedback loops.

Figure 03: Virtual reality has the potential to immerse us in new learning environments and help us cultivate our sense of curiosity. [Photo: by Scott Web on Unsplash]

“We have this progression based approach where we move kids through the same grade levels at the same age, expect them to learn the same material. And it’s very industrial or organizationally oriented. We will treat all learners as the same. Move them through and they’ll graduate by this age with this amount of knowledge and oh, by the way, they have to cover this material in this year. And I understand why we’ve arrived at that model because it’s an easy one to scale,” says Anderson. “But we know it’s not effective, right? It’s not the best way to teach people. And the best way to teach people is nothing new. It’s been around since at least Maria Montessori in the late ’80s, ’90s, where it’s much more about the learner. It’s much more about cultivating a sense of curiosity about the world [as well as an] interest in learning and teaching yourself. You see it play out in Montessori programs around the world … their offshoots or various things like the Waldorf school, where it’s almost at the other extreme—where there’s not a concern with being comprehensive and covering all the concepts. It’s more about hands on, active engagement with things, encouraging or project based, inquiry based, all these things.”

Anderson gives us an active learning example demonstrating how augmented reality might help us learn in a hands on way, in a wide variety of contexts — even in day-to-day tasks like cooking in our homes. “… As prices come down in cameras and projectors, imagine if every light bulb in our house could project onto a surface and also see interactions. So, now virtually all surfaces become interactive. So, you can be [in the kitchen] at your cutting board and cutting something and getting feedback [from the learning system] around like this slice of meat should be a little bit thinner or thicker. … You see these timeless ideas of feedback loops and interactivity and playfulness,” says Anderson.

Nelson too sees realities and future trends that will emerge, but aren’t here yet, as he talks about emerging technology and Minerva: “I believe that some of the real opportunities in the future are going to be where augmented reality will effectively replace the need to have a laptop type interface. I could imagine augmented reality where you have a classroom of students and a professor in one place, or where you have actually 30 students in 30 different parts of the world having an immersive real life experience with a professor with the data overlay. I think when you have the opportunity for education to remove boundaries and constraints, you can all of a sudden think very differently about what the nature of education should be. That empowers humans to come up with solutions that are far, far, far more advanced than what most universities are currently doing.”

Download the Open Source Daily app:

https://opensourcedaily.org/2579/

Join us:

https://opensourcedaily.org/about/join/

Follow us:

https://opensourcedaily.org/about/love/